In this section you can browse a selected, but not exhaustive, list of recently finished and ongoing projects carried out by GraphicsVision.AI members.

| GV.AI Partners | Project Acronym | Project Title | Scope | Start Year | End Year | Synopsis | Keywords | Consortium | Website | Contact Person | Contact Person Email | Image |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DFKI | ServiceFactory | Service Factory | National | 2016 | 2018 | The aim of this research project is to create an open platform and associated digital infrastructure in the form of interfaces and architectures that allow market participants to achieve simple, secure and fair participation at different levels of value creation. This platform will be designed for the recording, analysis and aggregation of data acquired from everyday used sensors (Smart Objects), as well as the conversion of these data into digital services (Smart Services), both technically and with regard to the underlying business and business model. Furthermore, for an initial technical product area, sports shoes will be considered, the possibility is exerted to extend these to cyberphysical systems which go beyond the existing possibilities of data collection (pure sensors). This is to create a broadly communicable demonstrator object that can bring the possibilities of smart services and intelligent digitization to the general public. In addition, the technical prerequisites as well as suitable business models and processes are created in order to collect the data under strict observance of the legal framework as well as the consumer and data protection for the optimization of product development, production and logistics chains. Here, too, an open structure will allow market participants to work with these data at different levels of added value. | platforms, sensors, IoT, cyberphysical systems, production, logistics, Industry4.0 | adidas, dresden elektronik, RWTH Aachen, Telekom, humotion, VDI | https://av.dfki.de/projects/servicefactory/ | Manthan Pancholi | manthan.pancholi@dfki.de |

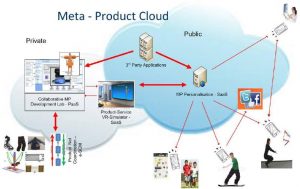

| DFKI | EASY-IMP | | H2020 | 2013 | 2016 | EASY-IMP is a large initiative started in September 2013. It brings together 12 partners from seven countries to develop new methodologies for designing and producing intelligent wearable products as Meta-Products. We propose a cloud-enabled framework for the collaborative design and development of personalised products and services, combining embedded (Internet of Things paradigm) and mobile devices with facilities for the joint open development of enabling downloadable applications. Recent developments in mobile, ubiquitous and cloud computing, as well as in intelligent wearable sensors, impose a new understanding of products and production models. A (Meta-)Product is now a customer-driven, customisable entity that integrates sensory/computing units, which are in turn connected to the cloud, leading to a paradigm shift from mass production to intelligent, over-the-web configurable products. This evolution triggers an essential change of the whole design/production life cycle and opens totally new perspectives towards person-oriented production models that allow agile, small-scale and distributed production, with a considerable impact on cost-effectiveness and ecology. However, product design and production are now becoming highly complex and require interdisciplinary expertise. The lack of relevant and appropriate methodologies and tools is a barrier to the wider adoption of the new production paradigm. Smartphones can be considered the prime example of Meta-Products. Another emerging market with significant economic and societal influence, and a high demand for customisation, is the market for intelligent wearable products (clothes, shoes, accessories, etc.). Although new materials and intelligent on-body sensors have been developed in recent years, an integrated Meta-Product approach is still lacking. | wearable, sensors, mobile, ubiquitous, cloud, manufacturing, personalised | ATOS Spain, interactive wear, Université Lumière Lyon 2, Athens Technology Center, hypercliq, Institute of Biomechanics of Valencia, Smart Solutions Technologies S.L., Timocco Ltd, Sylvia Lawry Centre for MS Research e.V., The Human Motion Institute, University Rehabilitation Institute, Federation of the European Sporting Goods Industry | https://av.dfki.de/projects/easy-imp/ | Norbert Schmitz | norbert.schmitz@dfki.de | |
| DFKI | Eyes Of Things | H2020 | 2015 | 2018 | The aim of the European Eyes of Things it to build a generic Vision device for the Internet of Things. The device will include a miniature camera and a specific Vision Processing Unit in order to perform all necessary processing tasks directly on the device without the need of transferring the entire images to a distant server. The envisioned applications will enable smart systems to perceive their environment longer and more interactively. The technology will be demonstrated with applications such as Augmented Reality, Wearable Computing and Ambient Assisted Living. Vision, our richest sensor, allows inferring big data from reality. Arguably, to be “smart everywhere” we will need to have “eyes everywhere”. Coupled with advances in artificial vision, the possibilities are endless in terms of wearable applications, augmented reality, surveillance, ambient-assisted living, etc. Currently, computer vision is rapidly moving beyond academic research and factory automation. On the other hand, mass-market mobile devices owe much of their success to their impressing imaging capabilities, so the question arises if such devices could be used as “eyes everywhere”. Vision is the most demanding sensor in terms of power consumption and required processing power and, in this respect, existing massconsumer mobile devices have three problems: power consumption precludes their ‘always-on’ capability, they would have unused sensors for most vision-based applications and since they have been designed for a definite purpose (i.e. as cell phones, PDAs and “readers”) people will not consistently use them for other purposes. Our objective in this project is to build an optimized core vision platform that can work independently and also embedded into all types of artefacts. 
The envisioned open hardware must be combined with carefully designed APIs that maximize inferred information per milliwatt and adapt the quality of inferred results to each particular application. This will not only mean more hours of continuous operation, it will allow to create novel applications and services that go beyond what current vision systems can do, which are either personal/mobile or ‘always-on’ but not both at the same time. Thus, the “Eyes of Things” project aims at developing a ground-breaking platform that combines: a) a need for more intelligence in future embedded systems, b) computer vision moving rapidly beyond academic research and factory automation and c) the phenomenal technological advances in mobile processing power. | IoT, camera, Computer Vision, intelligent, AI | Unvisersidad de Cadtilla-La Mancha (UCLM, Spain), Awaiba Lda (Portugal), Camba.tv Lts (EVERCAM, Ireland), Movidius Ltd (Ireland), Thales Communications and Security SAS (France), Fluxguide OG (Austria), nViso SA (Switzerland) | https://av.dfki.de/projects/eyes-of-things/ | Alain Pagani | alain.pagani@dfki.de | |

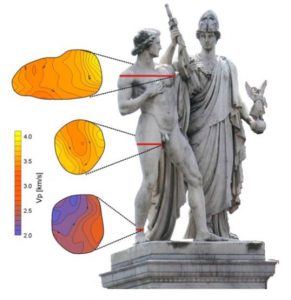

| DFKI | Marmorbild | | National | 2016 | 2019 | The goal of the joint research project Marmorbild of the University of Kaiserslautern, the Fraunhofer Institute IBMT and the Georg-August-University Göttingen is to validate modern ultrasound technologies and digital reconstruction methods for the non-destructive testing of facades, constructions and sculptures made of marble. The proof of concept was provided by prior research. The planned portable assessment system holds high potential for innovation. In the future, more objects can be examined cost-effectively in shorter periods of time. Damage can be identified at an early stage, allowing efforts and financial resources to be invested in a targeted way. | ultrasound, reconstruction, sculptures, testing, quality | University of Kaiserslautern, Fraunhofer Institute IBMT, Georg-August-University Göttingen | https://av.dfki.de/projects/marmorbild/ | Gerd Reis | gerd.reis@dfki.de | |
| VICOMTECH | DOMO-FACE | Deep Learning Face Recognition in domotic gate way | Industry | 2017 | 2017 (2 months) | Development of a facial recognition system at home for Euskaltel clients. With the monitoring system of the house, the service alerts of possible people intrusions are given.Platform : orange pi. The migration to the platform was successfully done. Finally this was not included in the final product since they had to select the total number of services to be implemented | Face Recognition, Deep Learning .Domotic | Euskaltel | https://www.youtube.com/watch?v=B4m2RVFLbME | Gorka Marcos | tech.transfer@vicomtech.org |

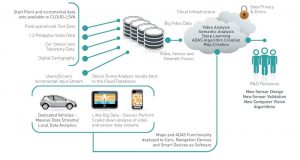

| VICOMTECH, LIST | Cloud-LSVA | Cloud Large Scale Video Analysis | H2020 | 2016 | 2018 | Cloud-LSVA will create Big Data technologies to address the open problem of a lack of software tools and hardware platforms for annotating petabyte-scale video datasets. The problem is of particular importance to the automotive industry. CMOS image sensors for vehicles are currently the primary area of innovation for camera manufacturers. They are the sensor that offers the most functionality for the price in a cost-sensitive industry. By 2020 the typical mid-range car will have 10 cameras, be connected, and generate 10 TB per day, without considering other sensors. Customer demand is for Advanced Driver Assistance Systems (ADAS), which are a step on the path to autonomous vehicles. The European automotive industry is the world leader and dominant in the market for ADAS. The technologies depend upon the analysis of video and other vehicle sensor data. Annotations of road traffic objects, events and scenes are critical for training and testing the computer vision techniques at the heart of modern ADAS and navigation systems. Thus, building ADAS algorithms with machine learning techniques requires annotated datasets. Human annotation is an expensive and error-prone task that has so far only been tackled on a small scale. Currently no commercial tool exists that addresses the need for semi-automated annotation or that leverages the elasticity of cloud computing to reduce the cost of the task. Providing this capability will establish a sustainable basis to drive forward automotive Big Data technologies. Furthermore, the computer is set to become the central hub of a connected car, which provides the opportunity to investigate how these Big Data technologies can be scaled to perform lightweight analysis on board, with results sent back to a cloud crowdsourcing platform, further reducing the complexity of the challenge faced by the industry. Car manufacturers can then in turn cyclically update the ADAS and mapping software on the vehicle, benefiting the consumer. | Cloud, ADAS, Automotive, Autonomous car | Fundación Centro de Tecnologías de Interacción Visual y Comunicaciones Vicomtech (coordinator, Donostia-San Sebastián, ES); Valeo Schalter und Sensoren GmbH; Technische Universiteit Eindhoven; Dublin City University; IBM Ireland Limited; European Road Transport Telematics Implementation Coordination Organisation - Intelligent Transport Systems & Services Europe; TomTom International BV; Commissariat à l'énergie atomique et aux énergies alternatives; Intempora; TASS International Mobility Center BV; Intel Deutschland GmbH; University of Limerick; Intel Corporation | https://www.cloud-lsva.eu | Gorka Marcos | tech.transfer@vicomtech.org | |
| VICOMTECH, LIST | VI-DAS | Vision Inspired Driver Assistance Systems | H2020 | 2016 | 2019 | Road accidents continue to be a major public safety concern, and human error is their main cause. Intelligent driver systems that can monitor the driver’s state and behaviour show promise for our collective safety. VI-DAS will advance the design of next-generation 720° connected ADAS (scene analysis, driver status). Advances in sensors, data fusion, machine learning and user feedback provide the capability to better understand driver, vehicle and scene context, facilitating a significant step along the road towards truly semi-autonomous vehicles. On this path there is a need to design vehicle automation that can gracefully hand control over to the driver and take it back. VI-DAS advances in computer vision and machine learning will introduce non-invasive, vision-based sensing capabilities to vehicles and enable contextual driver behaviour modelling. The technologies will be based on inexpensive and ubiquitous sensors, primarily cameras. Predictions of outcomes in a scene will be used to determine the best reaction to feed to a personalised HMI component that proposes optimal behaviour for safety, efficiency and comfort. VI-DAS will employ a cloud platform to improve ADAS sensor and algorithm design and to store and analyse data at a large scale, thus enabling the exploitation of vehicle connectivity and cooperative systems. VI-DAS will address human error by studying real accidents in order to understand patterns and consequences as an input to the technologies. VI-DAS will also address legal, liability and emerging ethical aspects, because such technology brings new risks and justifiable public concern. The insurance industry will be key in the adoption of next-generation ADAS and autonomous vehicles, and a stakeholder in reaching Level 3 (L3) automation. VI-DAS is ideally positioned at the point in the automotive value chain where Europe is dominant and where value can be added. The project will contribute to reducing accidents, to economic growth and to continued innovation. | | Fundación Centro de Tecnologías de Interacción Visual y Comunicaciones Vicomtech; Valeo Schalter und Sensoren GmbH; IBM Ireland Limited; Commissariat à l'énergie atomique et aux énergies alternatives; Honda Research Institute Europe GmbH; Institut français des sciences et technologies des transports, de l'aménagement et des réseaux; Dublin City University; TASS International Mobility Center BV; Technische Universiteit Eindhoven; TomTom International BV; Intel Deutschland GmbH; University of Limerick; Intempora; Karlsruher Institut für Technologie; Akiani; XL Insurance Company SE | https://www.vi-das.eu | Gorka Marcos | tech.transfer@vicomtech.org | |
| VICOMTECH | DESIREE | Decision Support and Information Management System for Breast Cancer | H2020 | 2016 | 2019 | Breast cancer is the most common type of cancer affecting women in the EU. Multidisciplinary Breast Units (BUs) were introduced to deal efficiently with breast cancer cases, setting guideline-based quality procedures and a high standard of care. However, daily practice in the BUs is hampered by the complexity of the disease, the vast amount of patient and disease data available in the digital era, the difficulty of coordination, the pressure exerted by the system and the difficulty of deciding on cases that guidelines do not cover. DESIREE aims to alleviate this situation by providing a web-based software ecosystem for the personalized, collaborative and multidisciplinary management of primary breast cancer (PBC) by specialized BUs. Decision support on the available therapy options will be provided by incorporating experience from previous cases and outcomes into an evolving knowledge model, going beyond the limitations of the few existing guideline-based decision support systems (DSS). Patient cases will be represented by a novel digital breast cancer patient (DBCP) data model, incorporating variables relevant for decision-making, novel sources of information and biomarkers of diagnostic and prognostic value, and providing a holistic view of the patient presented to the BU through specialized visual exploratory interfaces. The influence of new variables and biomarkers in current and previous cases will be explored with a set of data mining and visual analytics tools, leveraging large amounts of retrospective data. Intuitive web-based tools for multi-modality image analysis and fusion will be developed, providing advanced imaging biomarkers for breast and tumor characterization. Finally, a predictive tool for breast-conserving therapy will be incorporated, based on a multi-scale physiological model, allowing the aesthetic outcome of the intervention and the healing process to be predicted, with important clinical and psychological implications for the patients. | Medical imaging, Decision support system, Data analytics, e-Health | Fundación Centro de Tecnologías de Interacción Visual y Comunicaciones Vicomtech; University of Ulster; Institut National de la Santé et de la Recherche Médicale; The Methodist Hospital Research Institute; Arivis AG; Medical Innovation and Technology Single Member PC; Bilbomatica SA; Assistance Publique - Hôpitaux de Paris; Exploraciones Radiológicas Especiales S.L.; Fundación Onkologikoa Fundazioa; Sistemas Genomicos SL; University of Houston System | https://www.desiree-project.eu | Gorka Marcos | tech.transfer@vicomtech.org | |
| VICOMTECH | CODICE | Computer Vision for Dimensional Inspection | Industry | 2017 | 2019 | Development of fast 3D reconstruction software approaches, combined with different industrial set-ups, in order to enable the design of a new generation of industrial inline inspection systems. | Inline inspection, 3D reconstruction | CIE AUTOMOTIVE, EKIDE, SARIKI METROLOGIA, MICRODECO, ETXETAR | | Gorka Marcos | tech.transfer@vicomtech.org | |
| CCG | AGATHA | Intelligent System for Surveillance and Crime Control on Open Sources of Information | National | 2016 | 2019 | This project aims to develop a tool for criminal investigation police and intelligence services that will help gather evidence of criminal practices. In other words, it will develop a platform that draws on all openly available information and analyses it automatically. These sources include social networks, forums, images, the blogosphere, other information sources on the web, and broadcast media on the web such as radio or TV. To this end, modules and components will be developed for various tasks, notably: data acquisition (based on crawling algorithms, data mining and ETL tools); analysis of video/image, audio/voice, text and biometrics (enabling the detection of specific images, facial and speaker identification, translation of information, etc.); classification and semantic segmentation (for detecting topics and dialogues and extracting entities); and data management, organization and visualization (incorporating criminal ontologies, retrieving information from the databases, and visual analysis of large amounts of data). Security mechanisms will be developed to ensure compliance with the specific procedures inherent to investigative activities and full traceability of the stored information. | Surveillance, Crime control, Machine (Deep) Learning, Pattern Recognition, Computer Vision, Semantic Scene Understanding | Compta Business Solutions, SA; Computer Graphics Center; Voiceinteraction, SA; University of Évora; Center for Innovation in Information Technology (CITI) | https://www.ccg.pt/projetos/agatha-sistema-inteligente-analise-fontes-informacao-abertas-vigilanciacontrolo-criminalidade/ | Miguel Guevara | | |
| CCG | HeritageCARE | Monitoring and Preventive Conservation of Historic and Cultural Heritage | H2020 | 2016 | 2019 | The preservation of buildings of historical and cultural value in south-west Europe is currently not a regular, organized activity; it only occurs when serious problems arise. To address this urgent situation, the HeritageCARE project aims to implement a management system for the preventive conservation of historical and cultural heritage, based on a series of services provided by a non-profit organization created in Spain, France and Portugal. The project is the first joint strategy for preventive heritage conservation in south-west Europe. | Preventive conservation, Computer Vision, Augmented Reality, Cultural Heritage, Management Systems | From Portugal: Universidade do Minho; Direção Regional de Cultura do Norte; Computer Graphics Center. From Spain: Universidad de Salamanca; Instituto Andaluz del Patrimonio Histórico. From France: University Clermont Auvergne; Université de Limoges. | http://heritagecare.eu/ | Miguel Guevara | | |
| CCG | CHIC | Cooperative Holistic view on Internet and Content | National | 2017 | 2020 | The main objective of the mobilizing project “CHIC – Cooperative Holistic view on Internet and Content” is to develop, test and demonstrate a wide range of new processes, products and services in the audiovisual and multimedia sectors. Given the cross-cutting nature of these processes, products and services, mobilizing effects are also expected in other important sectors, such as culture, specifically cultural heritage, archives, books and publications, and the performing arts. A wide range of activities will enable the development of technology and solutions in three main areas: (1) open platforms for managing the production and distribution of digital content in the cloud; (2) management of content belonging to the national cultural heritage, based on open systems of preservation and interaction; (3) creation, production and consumption of content, focusing on Quality of Service and Experience, using immersive environments and high definition. Through its applied research domains CVIG and PIU, the CCG is involved in the third area, namely: (1) developing algorithms and tools for defining the narrative in immersive content (360° videos and virtual reality) and investigating spatial referencing techniques to highlight points of interest in 360° environments; (2) studying and developing a new paradigm for the book, applying concepts of Physical Computing, Augmented Reality and IoT to create a "hybrid book". The CCG's contribution also covers the scientific management of these activities and the dissemination of the results achieved. | Immersive Virtual Environments, Auralization Systems, 360° Video, 3D Sound, Audiovisual production | CCG, MOG, INESC-TEC, Gema, Altice Labs, Jornal de Notícias, Cluster Media Labs, … | https://chic.mog-technologies.com/ | Nelson Alves | | |
| CCG | TexBoost | TexBoost – less Commodities more Specialties | National | 2017 | 2020 | TexBoost aims to cluster collective R&D initiatives with a strong inducing and demonstration component, involving textile and clothing companies as well as other complementary sectors of the economy. The CCG (Centre for Computer Graphics) is involved in PPS phase 1 of the project, more specifically in core activity 1, which covers technological development for the digitization and dematerialization of fabric samples. The CCG's applied research domain EPMQ is responsible for researching and developing a computer tool for the dematerialization of fabric samples, comprising the conceptualization and implementation of a digital platform for integrating, processing and analysing decision-support data in the virtual prototyping of fabrics. For this purpose, interoperability architectures as well as Big Data and Machine Learning algorithms will be used. These algorithms learn from historical textile data and predict and optimize variables/parameters useful for decision-making in textile production. | Big Data, Machine Learning, dematerialization, fabrics | RioPele, Citeve | https://www.texboost.pt | Ana Lima | | |
| CCG, VICOMTECH | 5G-MOBIX | 5G for cooperative & connected automated MOBIlity on X-border corridors | H2020 | 2019 | 2021 | 5G-MOBIX aims to execute CCAM trials along cross-border and urban corridors using 5G core technological innovations, in order to qualify the 5G infrastructure and evaluate its benefits in the CCAM context, as well as to define deployment scenarios and to identify and respond to standardisation and spectrum gaps. 5G-MOBIX will first define the critical scenarios that need the advanced connectivity provided by 5G, and the features required to enable those advanced CCAM use cases. The match between the advanced CCAM use cases and the expected benefits of 5G will be tested during trials on 5G corridors in different EU countries as well as in China and Korea. These trials will allow evaluations and impact assessments to be carried out, and business impacts and cost/benefit analyses to be defined. As a result of these evaluations and of international consultations with public and industry stakeholders, 5G-MOBIX will propose views on new business opportunities for 5G-enabled CCAM, together with recommendations and options for deployment. The 5G-MOBIX findings on technical requirements and operational conditions will also allow the project to contribute actively to standardisation and spectrum allocation activities. 5G-MOBIX will evaluate several CCAM use cases enhanced by 5G, the next generation of mobile networks. Among the possible scenarios to be evaluated with 5G technologies, 5G-MOBIX has highlighted the potential benefits of ultra-reliable low-latency communication, enhanced mobile broadband, massive machine-type communication and network slicing. Several automated mobility use cases are candidates to benefit from, or even be enabled by, the advanced features and performance of 5G technologies, for instance, but not limited to: cooperative overtaking, highway lane merging, truck platooning, valet parking, urban driving, road user detection, remote vehicle control, see-through, HD map updates, and media & entertainment. | Cooperative, connected and automated mobility (CCAM), Communication networks, media, information society | 58 partners, including Vicomtech and CCG | https://www.5g-mobix.com/ | Carlos Silva | | |
| HPI | SensorAnalytics | Visual Analytics Tools for Sensor Data | Industry | 2015 | long-term research focus | The research project develops methods and tools for integrating sensor data generated by technical assets and plants. These support, for example, sensor-based monitoring and optimization of plant operations and maintenance processes using visual analytics techniques. Based on an easy-to-customize user interface, the framework allows apps that access and visualize sensor data to be built rapidly, using various 2D and 3D information visualization techniques. As a lightweight system layer, the approach can become part of almost any web-based IT solution that requires building blocks for managing, analyzing and visualizing sensor data. | Sensor data, visual analytics, predictive maintenance, information visualization | HPI | https://www.hpi3d.de | Dr. Benjamin Hagedorn | office-doellner@hpi.uni-potsdam.de | |
| HPI | GeospatialAnalytics | Visual Analytics Tools for Geospatial Data | Industry | 2015 | long-term research focus | This research project develops a web-based, service-oriented platform for the management, visualization and analysis of geospatial and geo-referenced data. The platform serves as a key element for all applications that need lightweight, state-of-the-art visual management of geospatial information. It offers tools that assist users in handling and querying geospatial information. | Geospatial visual analytics, 2D geodata, 3D geodata, geo-referenced data, city models | HPI | https://www.hpi3d.de | Dr. Benjamin Hagedorn | office-doellner@hpi.uni-potsdam.de | |
| HPI | AVA | Automated Video Abstraction | Industry | 2015 | 2019 | This research project develops a web-based, service-oriented platform for abstracting digital videos. Its functionality allows videos to be analyzed, shortened, summarized and stylized. It addresses fields such as artistic image and video abstraction, visual storytelling and video summarization. Its implementation is based on state-of-the-art smart-graphics and visual-computing solutions using extended neural networks and deep-learning-based style transfer. The cross-platform implementation runs on all common devices and operating systems. | Neural style transfer, digital video, smart graphics, user-generated content, smart media | HPI | https://www.hpi3d.de | Dr. Matthias Trapp | office-doellner@hpi.uni-potsdam.de | |
| HPI | MOBIE | Mobility Analytics | Industry | 2015 | 2019 | This research project focuses on mobility information based on all kinds of networks for streets, roads, public transport or other forms of multimodal mobility. The framework computes the "reachability" of targets (e.g., shops, hospitals, schools) over given networks for an entire spatial area (e.g., a city). | Mobility, network analysis, reachability, road networks, public transportation | HPI | https://www.hpi3d.de | Dr. Matthias Trapp | office-doellner@hpi.uni-potsdam.de | |
| HPI | VAA | Visual Analytics | Industry | 2017 | 2021 | This research project develops a web-based, service-oriented platform for visual business asset analytics. The term "business asset" covers any entity of value to a company (e.g., stocks, real estate, intellectual property, store networks), each characterized by specific attributes. Heterogeneous, multi-dimensional business asset data from different sources is integrated into a unified, high-dimensional information space and then analyzed with multivariate visualization techniques to identify patterns, clusters, outliers and correlations among multiple attributes. By incorporating the user’s domain knowledge and intuition into the visualization through customizable functions that represent domain-specific metrics and hypotheses, insights into business asset data can be obtained. The platform targets a broad range of clients (e.g., investment, consulting and financial services companies) seeking to quickly assess the value of potential investments and make informed decisions based on comprehensive, relevant data. | Business assets, visual analytics, financial services, risk analysis, multivariate information visualization | HPI | https://www.hpi3d.de | Prof. Dr. Jürgen Döllner | office-doellner@hpi.uni-potsdam.de | |
| HPI | 3DPW | 3D Point Clouds | Industry | 2017 | 2021 | This research project investigates and develops a technology platform that enables IT solutions and IT products to use 3D point clouds, such as those created by UAVs, laser scanners and stereo photography. The platform provides IT building blocks for fields such as the construction industry, disaster management, forestry, insurance and environmental monitoring. 3D point clouds, the most important form of "digital twins" for physical objects, sites and landscapes, can be handled efficiently, including multi-temporal 3D point clouds for detecting changes over time. | 3D Geodata, Geospatial Data, UAV, Laser Scan, Remote Sensing, LiDAR, Point Clouds, Photogrammetry | HPI | https://www.hpi3d.de | Prof. Dr. Jürgen Döllner | office-doellner@hpi.uni-potsdam.de | |
| HPI | TreeMaps | Hierarchical Information Visualization based on Tree Maps | Industry | 2017 | 2021 | This research project provides a framework for interactive tree maps used to visualize hierarchical data. It provides scalable visualization technology able to handle even massive data sets. Tree maps can be used in a number of domains, for example for software code (software maps), business objects (business maps) or any other kind of hierarchical data. As a unique feature, the framework supports 3D tree maps, providing an additional degree of freedom for the visual mapping of multidimensional hierarchical data. | Tree Map, Hierarchical Information Visualization | HPI | https://www.hpi3d.de | Prof. Dr. Jürgen Döllner | office-doellner@hpi.uni-potsdam.de | |
| HHI | Content4All | Personalised Content Creation for the Deaf Community in a Connected Digital Single Market | H2020 | 2017 | 2020 | The project Content4All aims to make content more accessible for the sign language community by implementing an automatic sign-translation workflow with a photo-realistic 3D human avatar. The final result will enable low-cost personalization of content for deaf viewers without causing disruption for hearing viewers. Fraunhofer HHI is responsible for the generation and development of a novel hybrid human body model that allows for photo-realistic, real-time rendering and animation. This work covers all aspects of model creation, capture, animation, and rendering: exploiting innovative capture technologies to reproduce fine details (e.g., fingers and hands for sign language animation) with limited modeling effort, i.e., fusing rough body models for semantic animation with video samples as dynamic textures; integrating novel rendering methods that combine standard geometry-based computer graphics rendering with warping and blending of video textures; and investigating and developing new techniques for realistic and efficient transitions between the video textures and the geometry motion. | language, sign language, translation, workflow, 3D, avatar | FINCONS, University of Surrey, Fraunhofer HHI, Human Factors Consult (HFC), SWISS TXT, Vlaamse Radio- en Televisieomroep (VRT) | https://www.hhi.fraunhofer.de/en/departments/vit/research-groups/computer-vision-graphics/projects.html | Prof. Dr.-Ing. Peter Eisert | peter.eisert@hhi.fraunhofer.de | |

| HHI | Replicate | cReative-asset harvEsting PipeLine to Inspire Collective-AuThoring and Experimentation | H2020 | 2016 | 2018 | REPLICATE’s main goal is to stimulate and support collaborative creativity for everyone, anywhere and anytime (ubiquitous co-creativity). To achieve this goal, the project will address the different aspects that currently complicate content creation and collaborative co-creation. REPLICATE aims to build upon leading research into the use of smartphones and their sensors to deliver robust image-based 3D reconstruction of objects and their surroundings via highly visual, tactile, and haptic user interfaces. To deliver real-time, interactive tools for high-quality 3D asset production, the project will balance device-based and cloud-based computational load rather than simply replacing regular computers with mobile devices. In this way, REPLICATE will enable everyone to take part in the creative process, anywhere and anytime, through a seamless user experience: from capturing the real world, through modifying and adjusting objects for flexible use, to finally repurposing them in co-creative MR workspaces to form novel creative media or giving them physical expression via rapid prototyping. | content, media, creation, creativity, interactive, 3D production | AnimalVegetableMineral, gameware, Fondazione Bruno Kessler, t2i, ETH Zürich, wikitude | https://www.hhi.fraunhofer.de/en/departments/vit/research-groups/computer-vision-graphics/projects.html | Prof. Dr.-Ing. Peter Eisert | peter.eisert@hhi.fraunhofer.de | |

| HHI | M3D | Mobile 3D Capturing and 3D Printing for Industrial Applications | National | 2016 | 2018 | The M3D project aims to improve currently established workflows for handling spare parts for specialized industrial machines by exploiting recent advances in 3D reconstruction, object identification, and 3D printing. Any spare-part service attempts to achieve “getting the right part to the right place at the right time and the right cost”. For specialized and complex mechanical appliances (e.g., trains or cars), this process typically requires highly qualified expertise for unique part identification, for any engineering steps that may be needed, and for logistics. Nevertheless, customers demand an immediate response and solution when maintaining or repairing such devices. The objective is therefore to accelerate spare-part identification, ordering, and delivery by improving the major parts of the workflow: fast, easy-to-use, and accurate on-the-spot 3D scanning and reconstruction of the required spare part using a mobile solution; automated, easy-to-use, and reliable on-the-spot part identification by searching a CAD database with the previously acquired 3D data; and improved methods for manufacturing (e.g., 3D printing) the required spare parts from available CAD data or the 3D scan data. Fraunhofer HHI is responsible for project management and is also involved through two of its groups. The Computer Vision and Graphics group will contribute its expertise in multi-view 3D reconstruction and will create an image-based mobile 3D reconstruction workflow for the subsequent identification of the object in large databases. The reconstruction process will specifically address the complex capture environment as well as challenging object characteristics. While acquisition will be performed on the mobile platform, computationally demanding tasks will be moved to the cloud. To assist the user during acquisition, a mobile user interface will be developed that gives visual feedback and also lets the user provide additional information to guide the reconstruction process. The Embedded System Group will develop a computing platform based on heterogeneous processing architecture concepts, incorporating hardware accelerators to run the 3D reconstruction in the cloud. | workflow, machines, industry, spares, 3D | | https://www.3it-berlin.de/projects/m3d/ | Prof. Dr.-Ing. Peter Eisert | peter.eisert@hhi.fraunhofer.de | |

| HHI | EASY-COHMO | Ergonomics Assistance Systems for Contactless Human-Machine-Operation | National | 2017 | 2019 | The collaborative project EASY-COHMO of the 3DSensation consortium targets the exploration and development of operational concepts for human-machine interaction and human-machine cooperation, seamlessly integrated into different working contexts. As part of this general objective, this subproject addresses object- and room-stable augmentation of the environment or working space. A context-sensitive spatial presentation of additional information and visualizations of three-dimensional data supports the user and allows the machine to communicate its status. The subproject includes both analysis components that capture the augmented environment and the processing and presentation of the displayed content. This includes perspective distortion of the content to guarantee correct display regardless of the augmented area, which can potentially be the user's complete working space. To show information at the correct position in space, head-mounted displays and projector-based systems following the concept of spatial augmented reality are used. As the industry partners' application domains differ greatly in terms of working environment and displayed data (e.g., workflow information in production halls or atlas data of a patient), all algorithms are designed to be as generic as possible. This allows custom configuration and adaptation to individual conditions. | HMI, HMC, AR, working space, HMD, industry | | https://www.hhi.fraunhofer.de/abteilungen/vit/projekte/easy-cohmo.html | Prof. Dr.-Ing. Peter Eisert | peter.eisert@hhi.fraunhofer.de | |

| HHI | Ananas | Anomaly detection to prevent attacks on facial recognition systems | National | 2016 | 2019 | Identification tasks with identity cards, passports, and visas are often performed automatically by biometric face recognition systems. Criminals can trick these systems so that two people can use the same passport for authentication. This attack (a morphing attack) is performed by fusing two face images into a synthetic face image that contains characteristics of both people. If this image is used on a passport, both people are authenticated by a biometric face recognition system. The objective of ANANAS is to analyze the risk of morphing attacks and develop methods to detect them. Research on morphing attack detection methods in ANANAS is highly diversified, focusing on semantic image content, camera characteristics, and biometrics. Combining methods from these different concepts of forensic image analysis promises an important toolset for governmental authorities to ensure and rate the trustworthiness of face images, especially on sovereign documents. HHI’s contribution to the project concerns two tasks: management of an evaluation dataset with original face images and morphing attacks, including the development of new morphing attacks; and development of morphing attack detection methods based on semantic image content. | biometric, face recognition, attacks, trustworthiness | Otto-von-Guericke-Universität Magdeburg; Bundesdruckerei GmbH, Berlin; DERMALOG Identification Systems GmbH, Hamburg; Fraunhofer-Institut für Nachrichtentechnik Heinrich-Hertz-Institut (HHI), Berlin; Fraunhofer-Institut für Produktionsanlagen und Konstruktionstechnik (IPK), Berlin | www.forschung-it-sicherheit-kommunikationssysteme.de/projekte/ananas | Prof. Dr.-Ing. Peter Eisert | peter.eisert@hhi.fraunhofer.de | |

| LIST | EBSF 2 | European Bus System of the Future 2 | H2020 | 2015 | 2018 | The European Bus System of the Future 2 (EBSF_2) project is led by UITP and co-funded by the European Union’s Horizon 2020 research and innovation programme. The project (May 2015 – April 2018) builds on the results of the previous EBSF project (September 2008 – April 2013). Like its predecessor, EBSF_2 aims to develop a new generation of urban bus systems through new vehicle technologies and infrastructure combined with operational best practices, and to test them in operating scenarios within several European bus networks. The distinctive feature of EBSF_2 is the consortium’s ambition to improve the image of the bus through solutions that increase the efficiency of the system, mainly in terms of energy consumption and operational costs, as required by today’s economic situation. The need for more cost-effective and energy-efficient bus systems has led to the identification of a set of technological innovations and strategies with strong potential to optimize mainly the energy and thermal management of buses (in particular auxiliaries such as climate systems), green driver assistance systems, intelligent garage and maintenance processes, and standard IT equipment and services. Moreover, to move quickly from laboratory research to actual innovation in the bus fleets operating in Europe, the solutions to be tested were selected according to their technological maturity (and not only their potential), to ensure a short path to commercialisation after the end of the project. Simulators and prototypes are used either as a preliminary step for validating the innovations in real operational scenarios, which is also performed within the project, or as a necessary means of proving the potential of more futuristic solutions that are still at an early stage of development (e.g., the modular bus). The commitment of the main European bus manufacturers, combined with the presence of leading suppliers and large operators with worldwide experience, leads the partners to expect that the results of the project will have an important impact on urban bus services. Their joint collaboration is expected to produce high-quality products for the next generation of buses, strengthening the European bus industry's leading role worldwide and paving the way for the possible development of standard concepts. As the main impact of EBSF_2, the partners expect an overall increase in the attractiveness of bus systems and an economic benefit with regard to total operational costs. | public transport, bus, urban, energy | | https://www.ebsf2.eu | Dr. Laurent Lucat | laurent.lucat@cea.fr | |

| LIST | ASGARD | Analysis System for Gathered Raw Data | H2020 | 2016 | 2019 | ASGARD aims to contribute to LEA technological autonomy by building a sustainable, long-lasting community for law enforcement agencies (LEAs) and the R&D industry. This community will develop, maintain, and evolve a best-in-class toolset for the extraction, fusion, exchange, and analysis of Big Data, including cyber-offence data for forensic investigation. ASGARD will help LEAs significantly increase their analytical capabilities. While forensics is a focus of ASGARD, the project addresses both the intelligence and the foresight dimensions. Specific challenge: petabytes of online and offline information, whether open to the public, owned by LEAs such as police forces and/or customs authorities, or resulting from the investigation of a (cyber-)offence, represent a valuable resource but also a management challenge. Access to huge amounts of data (structured data such as databases; unstructured data such as multilingual text and multimedia; semi-structured data such as HTML and XML; and heterogeneous data collected by LEA sensors such as video, audio, GSM, and GPS), all possibly obfuscated or anonymized, and available locally, over private LEA-owned or shared networks, or over the Internet, can easily result in information overload and become a problem instead of a useful asset. | law enforcement, security, big data, forensic | | https://www.asgard-project.eu | Dr. Laurent Lucat | laurent.lucat@cea.fr | |

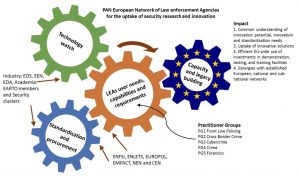

| LIST | I-LEAD | Innovation - Law Enforcement Agency's dialogue | H2020 | 2018 | 2020 | i-LEAD focuses on the capability of groups of operational Law Enforcement Agency (LEA) practitioners to define their needs for innovation. This will be done methodologically, with the help of the research and industrial partners and supplemented by a broad range of committed stakeholders. i-LEAD will build the capacity to monitor the security research and technology market to ensure better matching and uptake of innovations by law enforcement agencies, with the overarching aim of making it a sustainable pan-European LEA network. Previously funded European research with a high technology readiness level, as well as pipeline technologies, will be closely monitored and assessed for usefulness. Where possible, results will be widely disseminated to ENLETS and ENFSI members to enable them to take up the actions from this research. i-LEAD will indicate priorities in five practitioner groups as well as aspects that need (more) standardization, and will formulate recommendations on how to incorporate these into procedures. As a final step, i-LEAD will make recommendations to LEA members on how to use the Pre-Commercial Procurement (PCP) and Public Procurement of Innovation (PPI) instruments. | law enforcement, security | | https://www.i-lead.eu | Dr. Laurent Lucat | laurent.lucat@cea.fr | |

| LIST | AUTOPILOT | AUTOmated driving Progressed by Internet Of Things | H2020 | 2017 | 2019 | Automated driving is expected to increase safety, provide more comfort, and create many new business opportunities for mobility services. Its market share is expected to grow gradually, reaching 50% in 2035. The IoT is about enabling connections between objects or "things"; it is about connecting anything, anytime, anyplace, using any service over any network. There is little doubt that these vehicles will be part of the IoT revolution. Indeed, connectivity and IoT have the capacity for disruptive impacts on highly and fully automated driving along all value chains towards a global vision of Smart Anything Everywhere. To stay competitive, the European automotive industry is investing in connected and automated driving, with cars becoming moving “objects” in an IoT ecosystem and eventually participating in Big Data for Mobility. AUTOPILOT brings IoT into the automotive world to transform connected vehicles into highly and fully automated vehicles. The well-balanced AUTOPILOT consortium represents all relevant areas of the IoT ecosystem. An open IoT vehicle platform and an IoT architecture will be developed based on existing and forthcoming standards as well as open-source and vendor solutions. Thanks to AUTOPILOT, the IoT ecosystem will involve vehicles, road infrastructure, and surrounding objects, with particular attention to the safety-critical aspects of automated driving. AUTOPILOT will develop new IoT-based services involving autonomous vehicles, such as autonomous car sharing, automated parking, or enhanced digital dynamic maps that allow fully autonomous driving. AUTOPILOT's IoT-enabled autonomous cars will be tested in real conditions at four permanent large-scale pilot sites in Finland, France, the Netherlands, and Italy, whose test results will allow multi-criteria evaluations (technical, user, business, legal) of the IoT's impact on raising the level of autonomous driving. | automotive, autonomous, safety, IoT, automation | | https://www.autopilot-project.eu | Dr. Laurent Lucat | laurent.lucat@cea.fr | |

| LIST | EmoSpaces | Enhanced Affective Wellbeing based on Emotion Technologies for adapting IoT spaces | H2020 | 2016 | 2019 | The Internet of Things (IoT) has evolved from a far-fetched futuristic vision into something that can realistically be expected to become a mainstream concept within a few years. EmoSpaces’ goal is to develop an IoT platform that provides context awareness, with a focus on sentiment and emotion recognition and ambient adaptation. The main innovation of EmoSpaces lies in treating emotions and sentiments as a context source for improving intelligent services in the IoT. | IoT, intelligent environments, context awareness | | https://www.itea3.org/project/emospaces.html | Dr. Laurent Lucat | laurent.lucat@cea.fr | |

| DFKI, HHI, VICOMTECH | LUMINOUS | Language Augmentation for XR Worlds | HE | 2024 | 2026 | LUMINOUS aims to create the next generation of Language Augmented XR systems, where natural language-based communication and Multimodal Large Language Models (MLLMs) enable adaptation to individual, non-predefined user needs and unseen environments. This will enable future XR users to interact fluently with their environment while having instant access to constantly updated global and domain-specific knowledge sources to accomplish novel tasks. We aim to exploit MLLMs injected with domain-specific knowledge to describe novel tasks on user demand. These are then communicated through a speech interface and/or a task-adaptable avatar (e.g., coach/teacher) in terms of different visual aids and procedural steps for accomplishing the task. Language-driven specification of the style, facial expressions, and specific attitudes of virtual avatars will facilitate generalisable and situation-aware communication in multiple use cases and different sectors. In parallel, LLMs will help identify new objects that were not part of their training data and describe them in a way that makes them visually recognizable. Our results will be prototyped and tested in three pilots, focusing on neurorehabilitation (support of stroke patients with language impairments), immersive industrial safety training, and 3D architectural design review. A consortium of six leading R&D institutes with experts in six different disciplines (AI, Augmented Vision, NLP, Computer Graphics, Neurorehabilitation, Ethics) will follow a challenging workplan, aiming to bring about a new era at the crossroads of two of the most promising current technological developments (LLM/AI and XR), made in Europe. | AI, Augmented Vision, NLP, Computer Graphics, Neurorehabilitation, Ethics | 12 partners from 7 European countries | to be announced | Prof. Didier Stricker, DFKI | didier.stricker@dfki.de |

| DFKI, VICOMTECH | INFINITY | Revolutionising data-driven investigations | H2020 | 2020 | 2023 | In today’s data-driven society, criminals are preying on the information systems of private, public, corporate and government networks. The fight against cybercrime, terrorism and other hybrid threats hinges on technological and policy innovation. The EU-funded INFINITY project will couple virtual and augmented reality innovations, artificial intelligence and machine learning with big data and visual analytics. Its aim will be to deliver an integrated solution to revolutionise data-driven investigations. The project will address the core needs of contemporary law enforcement by equipping investigators with cutting-edge tools to acquire, process, visualise and act upon the enormous quantities of data they are faced with every day. It will assist law enforcement agencies with automated systems and intuitive interfaces and controls. | Security, immersive, interactive, collaborative, AI, Big Data, decision support | 20 entities from 11 countries | https://h2020-infinity.eu/ | --- | infinity@shu.ac.uk |

| DFKI, VICOMTECH | dAIEDGE | A network of excellence for distributed, trustworthy, efficient and scalable AI at the Edge | HE | 2023 | 2026 | The proposal focuses on the Next Generation AI topic of the call HORIZON-CL4-2022-HUMAN-02-02. The vision of the dAIEDGE Network of Excellence (NoE) is to strengthen and support the development of the dynamic European edge and distributed Artificial Intelligence (AI) ecosystem as an essential ingredient in the growth and competitiveness of European industrial sectors. The dAIEDGE Network aims to reinforce the research and innovation value chains to accelerate the digital and green transitions through advanced edge AI technologies, applications, and innovations, building on Europe's existing assets and industrial strengths. In parallel, it will fortify the edge AI research and industrial communities through technological developments beyond the state of the art and become a dependable and strategic pillar for the European AI Lighthouse. This will be achieved by mobilising and connecting the European AI and edge AI constituency, the relevant stakeholders, European partnerships, and projects, to provide roadmaps, guidelines and trends supporting next-generation edge AI technologies. The key aim is to support and ensure rapid development, market uptake and open strategic sovereignty for Europe in the critical technologies for distributed edge AI (hardware, software, frameworks, tools). The dAIEDGE NoE will play a catalyst role in building a solid edge AI virtual network of research facilities and laboratories to benefit the European research and industrial community. The NoE's multidisciplinary concept provides an arena for matchmaking and for exchanging ideas, tools, and services by bringing together the leading research centres, AI-on-demand platforms, digital innovation hubs, AI projects and initiatives. The ultimate goal of the dAIEDGE NoE is to help Europe become a global centre of excellence with unique human-centred edge AI competence, addressing social and economic challenges and the needs of citizens and society. | Edge, AI, ecosystems, innovation management | 36 entities from 15 countries | https://daiedge.eu/ | Dr.-Ing. Alain Pagani | Alain.Pagani@dfki.de |